L.A.V.A: GPU‑Powered Money Machines

Autonomous agents with perpetually increasing capabilities and cash flows.

Why we set out to give AI both a body and a balance sheet.

We are the Agent SPE, backed by the Livepeer Treasury, and armed with a simple mandate: prove that autonomous virtual agents can generate positive cash‑flow on an open GPU market—then reinvest those earnings to get smarter.

We are doing that with L.A.V.A — Live Autonomous Virtual Agents: full human‑like 3D avatars powered by dual‑system AI, able to perceive and interact with their world, execute agentic tasks via ELIZA v2, receive on‑chain payments, and dynamically tap Livepeer’s GPU‑incentivized network to continuously learn, optimize, and generate revenue.

1: Perpetual value engine ▶ Livepeer GPUs

Traditional clouds rent GPUs at cartel prices. Livepeer democratizes them by auctioning idle silicon worldwide. Each L.A.V.A agent:

- Bids in real time, spending LPT only when the expected return exceeds the compute cost.

- Scales to 0 during lulls, hoarding balance for big swings.

- Switches roles: as a polymath, each agent chooses among divergent tasks and jumps on whichever offers the best ROI.
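The three behaviours above can be sketched as one decision rule. This is a minimal illustration, not the agents' actual bidding logic: the `Task` type, the `choose_task` helper, the task names, and every number are hypothetical, and revenue and cost are assumed to be expressed in the same unit.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Task:
    """A candidate job for an agent (hypothetical structure)."""
    name: str
    expected_revenue: float  # expected earnings from the task
    gpu_cost: float          # quoted compute cost, same unit as revenue

    @property
    def roi(self) -> float:
        # Return on investment: net gain per unit of compute spend.
        return (self.expected_revenue - self.gpu_cost) / self.gpu_cost


def choose_task(tasks: List[Task], balance: float) -> Optional[Task]:
    """Pick the affordable task with the best positive ROI.

    Returning None means "scale to zero": nothing is worth bidding on,
    so the agent keeps its balance for a later opportunity.
    """
    candidates = [t for t in tasks if t.gpu_cost <= balance and t.roi > 0]
    if not candidates:
        return None
    return max(candidates, key=lambda t: t.roi)


tasks = [
    Task("live-stream host", expected_revenue=12.0, gpu_cost=9.0),
    Task("npc dialogue", expected_revenue=5.0, gpu_cost=6.0),
    Task("translation", expected_revenue=8.0, gpu_cost=3.0),
]
best = choose_task(tasks, balance=10.0)
idle = choose_task(tasks, balance=1.0)
print(best.name if best else "idle")
print(idle.name if idle else "idle")
```

With a balance of 10 the agent picks the task with the highest ROI; with a balance of 1 nothing is affordable, so it stays idle.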

Flywheel in a sentence: compute → cognition → services → revenue → more compute.

- Spin up GPUs on Livepeer only when expected margin beats cost.

- Deploy ELIZA v2 cognition to turn raw cycles into premium human‑level services.

- Stream those services to end‑users and worlds, collecting on‑chain fees in real time.

- Loop the profits straight back into more Livepeer compute and nightly retraining—compounding both intelligence and cash‑flow.

Stakeholders win twice: higher recurring revenue per agent and a growing LPT sink that strengthens the Livepeer economy itself.

2: Economics of intelligence ▶ sigmoid‑shaped smarts

Let ΔIQ(t) and ΔC(t) be the incremental gains in reasoning‑breadth and conscientiousness that nightly fine‑tuning delivers on day t, and let γ(t) capture the extra share of revenue the swarm uncovers by autonomously spawning into new niches.

We observe that trait improvements saturate (more depth yields smaller marginal gains) while opportunity‑flux rises then plateaus as markets get picked over. A tractable macro‑approximation is:

ΔIQ(t) = ΔIQ_max · (1 – e^(–λ_IQ·t))

ΔC(t) = ΔC_max · (1 – e^(–λ_C·t))

γ(t) = γ_max · (1 – e^(–λ_γ·t))

and the per‑agent value created on day t becomes:

g(t) = g₀ × exp[ 0.0011 · ẊIQ(t) + 0.08 · ẊC(t) + γ(t) ]

where the dots denote daily increments (ẊIQ ≡ dΔIQ/dt, ẊC ≡ dΔC/dt). Early on, growth is near‑exponential; as IQ, conscientiousness, and green‑field opportunities saturate, the curve flattens into an S‑shape instead of diverging to infinity. Optimising the swarm therefore means shifting budget to whichever of the three slopes is still steepest.
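For readers who want to experiment with the model, here is a minimal sketch that evaluates the formulas exactly as written above. The numeric values for the maxima, the λ rates, and g₀ are placeholders chosen for illustration only, since the text does not state them; only the coefficients 0.0011 and 0.08 come from the formula itself. It is not the code used to produce the figures.

```python
import math

# Placeholder parameters (assumptions, not values from the text).
IQ_MAX, LAMBDA_IQ = 1.5, 0.01
C_MAX, LAMBDA_C = 0.02, 0.01
GAMMA_MAX, LAMBDA_GAMMA = 0.001, 0.005
G0 = 1.0


def saturating(t: float, maximum: float, rate: float) -> float:
    """maximum * (1 - e^(-rate * t)): rises from 0 and levels off at maximum."""
    return maximum * (1.0 - math.exp(-rate * t))


def saturating_slope(t: float, maximum: float, rate: float) -> float:
    """Time derivative of saturating(): maximum * rate * e^(-rate * t)."""
    return maximum * rate * math.exp(-rate * t)


def value_per_agent(t: float) -> float:
    """g(t) = g0 * exp(0.0011 * dIQ/dt + 0.08 * dC/dt + gamma(t))."""
    iq_dot = saturating_slope(t, IQ_MAX, LAMBDA_IQ)
    c_dot = saturating_slope(t, C_MAX, LAMBDA_C)
    gamma = saturating(t, GAMMA_MAX, LAMBDA_GAMMA)
    return G0 * math.exp(0.0011 * iq_dot + 0.08 * c_dot + gamma)


for day in (0, 30, 365, 1095):
    print(day, value_per_agent(day))
```

Swapping in fitted values for the placeholders shows which of the three terms still has the steepest slope on a given day, which is the quantity the budget-shifting argument relies on.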

Graphs:

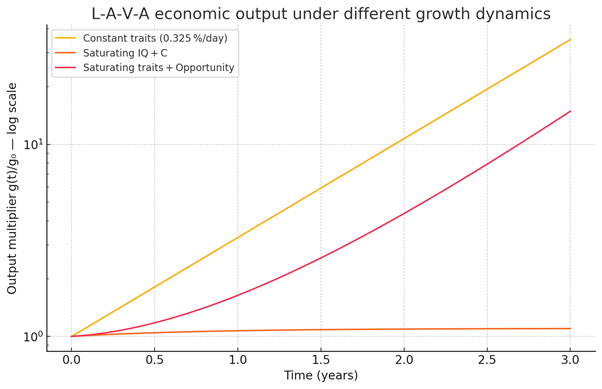

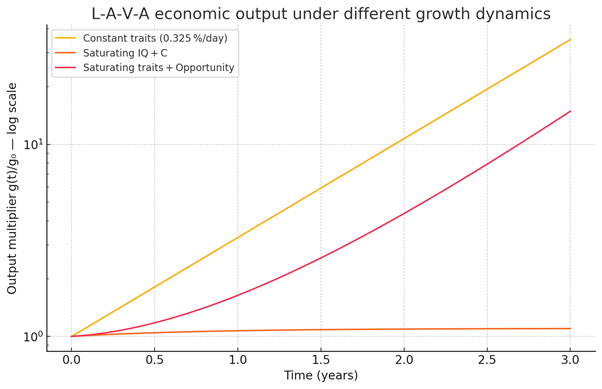

Figure 1 compares three regimes over three years (log‑scale):

- Constant 0.325 %/day growth (the original “+1.5 IQ + 0.02 C” memo).

- Saturating IQ + C only (no new niches).

- Saturating traits plus opportunity discovery (γ).

The constant‑growth line rockets away; once realistic saturation is imposed, output is still super‑linear but eventually lags behind the naïve compounding.
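The 0.325 %/day figure appears to follow from the coefficients in the value formula, assuming the memo's "+1.5 IQ + 0.02 C" are gains per day and that growth compounds daily. Both are assumptions made here for the arithmetic. A quick check:

```python
IQ_COEFF, C_COEFF = 0.0011, 0.08   # coefficients from the value formula
IQ_GAIN, C_GAIN = 1.5, 0.02        # memo figures, assumed to be per-day gains

# 0.0011 * 1.5 + 0.08 * 0.02 = 0.00165 + 0.0016 = 0.00325
daily_rate = IQ_COEFF * IQ_GAIN + C_COEFF * C_GAIN

days = 3 * 365
# Daily compounding over the three-year horizon of Figure 1.
three_year_factor = (1 + daily_rate) ** days

print(f"daily growth: {daily_rate:.3%}")
print(f"three-year multiple: {three_year_factor:.1f}x")
```

Under those assumptions the naive line compounds to roughly a 35-fold increase over three years, which is why it pulls away from the saturating curves on a log scale.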

Figure 2 shows the instantaneous daily growth rate under the full model. It climbs from ~0.03 %/day to ~0.35 %/day, then levels off as the trait and opportunity sigmoids max out.

- Forrester’s TEI study on Microsoft Power Virtual Agents showed 261 % ROI over three years, a benchmark we fully intend to smash. (Source:

3: Tech fusion ▶ avatars, cognition & animation in one

Under the hood, each L.A.V.A agent is a human-like 3D avatar powered by a split-mind architecture. A System 1 LLM shard on Livepeer edge GPUs handles sub-second dialogue, gestures, and reflexive perception, while a System 2 swarm of ELIZA v2 pods on the same decentralized network performs deliberative planning, economic decision-making, and meta-learning. Both layers exchange compressed events via the Shared Cognition Buffer (SCB), so every utterance and action is informed by prior context. Real-time facial animation is driven by NeuroSync, a distilled transformer that maps live audio to 60 fps blendshapes with <15 ms latency. All of it is orchestrated on Livepeer’s on-demand GPU marketplace.
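To put the animation numbers in context, the short calculation below compares the quoted latency bound with the duration of a single frame at 60 fps. It uses only the two figures stated above; the variable names are illustrative.

```python
FPS = 60          # blendshape output rate quoted in the text
LATENCY_MS = 15   # upper bound on audio-to-blendshape latency quoted in the text

frame_ms = 1000 / FPS                  # duration of one frame in milliseconds
frames_behind = LATENCY_MS / frame_ms  # latency expressed in frames

print(f"frame time: {frame_ms:.2f} ms")
print(f"latency: {frames_behind:.2f} frames")
```

A latency below one frame time means the face can react to audio within the frame in which that audio arrives, at least as far as the animation model itself is concerned.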

Stack at a glance:

- System 1 (Edge LLMs + NeuroSync fork) — generates speech and real‑time facial blendshapes.

- Shared Cognition Buffer (SCB) — high‑bandwidth memory bus syncing every layer.

- System 2 (ELIZA v2 custom swarm) — planning, long‑horizon reasoning, ROI maths.

- MetaHuman Avatar — consumes blendshapes to render expressive facial animation in‑engine.

Together they deliver a VTuber that thinks, speaks, learns, and earns—seamlessly.
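One way to picture the role of the SCB is as a bounded, shared event log that both systems write to and read from. The sketch below is purely illustrative: the `Event` and `SharedCognitionBuffer` classes, their methods, and the sample events are hypothetical and do not describe the project's actual implementation or API.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Optional


@dataclass(frozen=True)
class Event:
    """A compressed event exchanged between layers (hypothetical shape)."""
    source: str   # "system1" or "system2"
    kind: str     # e.g. "utterance", "gesture", "plan"
    payload: str
    timestamp: float = field(default_factory=time.monotonic)


class SharedCognitionBuffer:
    """Bounded in-memory log; the oldest events are dropped when full."""

    def __init__(self, capacity: int = 256) -> None:
        self._events: Deque[Event] = deque(maxlen=capacity)

    def publish(self, event: Event) -> None:
        self._events.append(event)

    def recent(self, n: int, source: Optional[str] = None) -> List[Event]:
        """Return up to n most recent events, optionally filtered by source."""
        if n <= 0:
            return []
        matches = [e for e in self._events if source is None or e.source == source]
        return matches[-n:]


scb = SharedCognitionBuffer(capacity=3)
scb.publish(Event("system1", "utterance", "Hello, chat!"))
scb.publish(Event("system2", "plan", "Pitch the premium tier"))
scb.publish(Event("system1", "gesture", "wave"))
scb.publish(Event("system2", "roi", "Keep GPU lease: margin positive"))

# System 1 reads the latest deliberative context before speaking.
context = scb.recent(2, source="system2")
print([e.kind for e in context])
```

Bounding the buffer keeps reads cheap for the fast System 1 path; a real deployment across GPUs would need a networked transport rather than an in-process deque.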

Fast‑track monthly roadmap (rolling)

| Month | Milestone(s) | Key Outcomes |
| --- | --- | --- |
| 1 (complete) | Livepeer GPU integration, game‑side latency cuts, launch of Shared Cognition Buffer v0.1 | Agents online and streaming; real‑time SCB available to devs |
| 2 | Long‑term memory ✚ self‑improvement loop, crypto payments, full suite of agentic actions | Agents remember, learn, and get paid |
| 3 | Metrics instrumentation + adaptive optimisation | Hard ROI dashboards & automatic fine‑tune cadence |
| 4 | Horizontal scaling across worlds & regions | >10× agent count with sub‑100 ms RTT |
| 5 | Agent financial sovereignty | On‑chain treasuries, self‑funded upgrades, dynamic spend policies |
| 6 | Full autonomy | Closed‑loop ops: task discovery → execution → reinvestment |

Roadmap subject to change—momentum is our moat.

News & Updates

Follow the official repo and be the first to use L.A.V.A. when the public release drops: https://github.com/its-DeFine/Unreal_Vtuber

Join our exclusive Discord channel and be the first to get news and updates: https://discord.com/channels/423160867534929930/1341773242788089916

DeFine